Colour is processed by one of our most complex senses, and each of us has a dynamic and unique relationship with it, both physiologically and mentally.

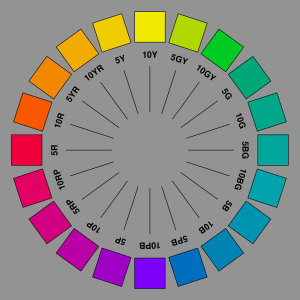

Albert Munsell was passionate about portraying colour theory in a way that focused not on mathematical values for colour but on the specific way in which the human eye and brain perceive colour, something he often likened to the meaningful and harmonious way in which we process and communicate with music. This philosophy resonated strongly with me and inspired me to delve deeper into human sensory cognition, particularly the connections between our visual and auditory cortices.

Because colour is so integrated into everyday life, it can be difficult to pin down consciously what a colour means to you and what it does to you. When I started this project, I became aware of how many of us had never thought about how a colour influences us, how it makes us feel or what sensory associations it holds within our memories. When it comes to our other primary sense, sound, the same question proved far easier to understand and answer. As a culture, we consume music primarily in an emotional and perceptual way, commonly using it to express ourselves, to change our mood and productivity levels, or as a powerful tool for reminiscing.

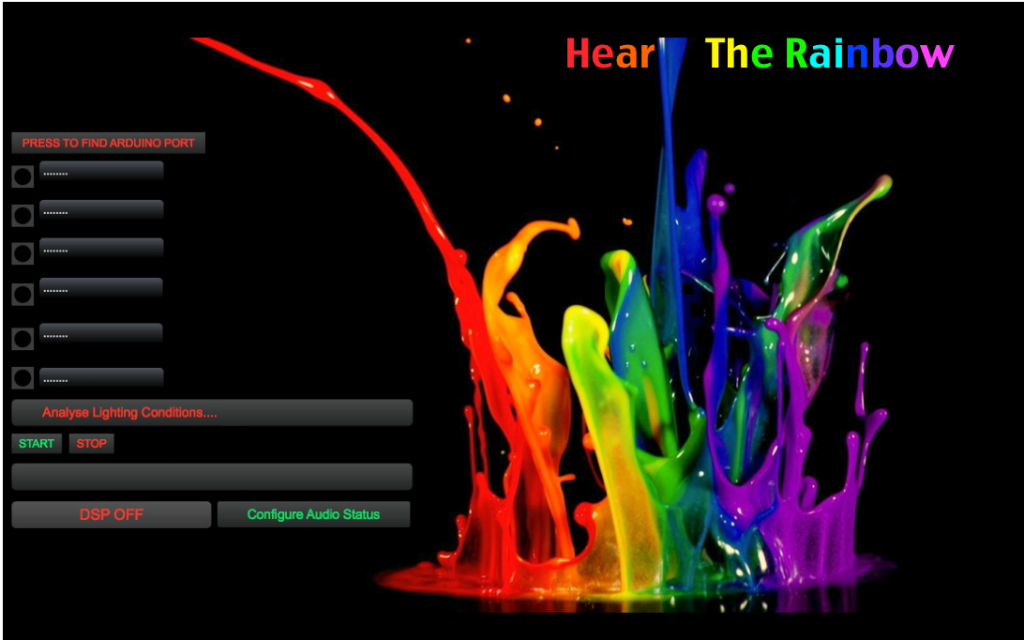

Hear The Rainbow is a sensory experience that provides a platform for exploring our perceptual relationship with the three-dimensional HSL (Hue, Saturation, Lightness) colour space by redesigning it in a familiar and emotive medium: sound.

Human Sensory Perception

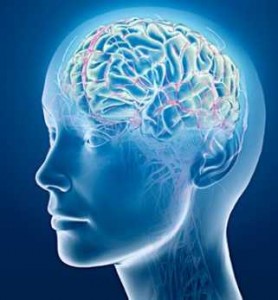

The way in which we respond to sensory information such as colour and light depends heavily on our own personal process of cognitive mediation and perceptual processing.

Consider an alien robot designed with eyes that replicate a human's. Placed on Earth for the first time, it can convert light energy into electrical impulses, but without the ability to extract relevant meaning and to compare and analyse the source and the data, the light remains visual noise and the robot cannot act on it.

To form this kind of meaningful perceptual processing, our senses work in parallel, intertwining to create a multimodal cognitive system that predominantly requires internal data from both the primary and secondary (auditory and visual) senses to identify physical objects and their attributes (Haverkamp, 2009). The perceptual system generates a model of the physical stimulus from the information gathered by each sense, eventually triggering cognitive imagery. By segregating that information into its corresponding features, this model forms what is called a perceptual object (Haverkamp, 2009).

To recognise this object as familiar and form a logical emotional and physiological response, multimodal correlations must be made that link acoustic features to visual ones, for example a hollow tone to the geometry or material of an object, enabling us to understand what a brown wooden box sounds like (Haverkamp, 2009). In this process, often referred to as indexing, both senses work in unison to reference an object. If an object is unknown, we simply turn our visual attention to the perceived source and basic audio/visual correlations take place (Haverkamp, 2009). This initial cross-correlation forms a primary perceptual object and hence a sensory memory. These memories are stored as a kind of polymodal “file” that saves the features from every sense involved when the perceptual object is first formed, so that the full cross-sensory image can later be re-triggered through any of the related senses (Haverkamp, 2009).

Alongside these initial interactions, our emotional response is paramount in determining the kind of associations we will hold in future. As stimuli are perceived, our emotions run on two different levels. Primary emotions are formed by innate physiological reactions: governed by the hypothalamus and the limbic system, our bodily condition changes in response to our situation. Present from birth, they form the basis of core emotions such as fear, happiness and sadness (Haverkamp, 2012). Secondary emotions are interlinked with our perceptual system: as stimuli are indexed against previous experiences, our physical condition is evaluated, and we can judge whether a stimulus poses a threat, for example from an elevated heart rate and from auditory markers such as unusual loudness compared with the referenced perceptual object (Haverkamp, 2012). From then on, these emotional responses are part of the multimodal file. Although fundamentally designed to protect us from harm, they shape our polarised relationships with the outside world, including with colours.

Although many of these cross-sensory analogies come from basic everyday correlations, e.g. looking at the sea and hearing the sound of the waves, our cultural upbringing and personal experiences also influence the links we make (Haverkamp, 2009). For example, in the Western world mourning clothes are mainly black, whereas in India white is predominantly worn, showing the different emotive and linguistic associations the two cultures may attach to either colour (Empowered By Color, 2015).

Symbolic, emotive and somatic associations are used heavily in the production of music and art, and even form the basis of colour psychology, which combines the physiological effect of coloured light with common associations to help us understand the impact of colour on our lives: for example, how colour can improve productivity and concentration, promote calmness or encourage mental stimulation.

A rainbow coloured sound wave.

Whilst these intermodal analogies occur daily across all five senses, the link between the auditory and the visual, and more specifically between sound and colour, has been widely discussed in art and science for centuries. In the 1600s, Sir Isaac Newton made some of the earliest comparisons between the visible spectrum of light and the musical scale (Gage, 1999). In that era, the bond between colours and their harmonious musical counterparts was readily discussed, stemming from developments in both optics and artistic colour theory. Moreover, synaesthesia, a phenomenon described as an “involuntary psychological mechanism by which two sensations are simultaneously triggered by the same stimulus” (Gage, 1999), is most commonly found across these two modalities. As cognitive science has developed, synaesthesia has come to be suspected of resulting from a cross-‘wiring’ of sensory pathways, causing auditory data to be partly processed within the visual cortex and vice versa (Nunn et al., 2002). However, this is difficult to confirm because of the often erratic and highly personal nature of the correlations.

This phenomenon affects just 4% of the UK population (Nhs.uk, 2015), but given the cognitive research into the average person’s cross-sensory perceptual abilities, it is not unreasonable to suggest that we all carry an inherent level of synaesthesia within our memories and our unavoidable interactions with the organic world. Whether we are aware of it is another matter.

Hear The Rainbow

Hear The Rainbow uses this core concept of human sensory perception to provide a synaesthetic experience for the everyday person, turning colour into sound by emulating the way our brain and body process colour. The nine predominant hues (Red, Orange, Yellow, Green, Light Blue, Dark Blue, Indigo, Violet, Pink) and three shades (Black, White, Grey) are redesigned as sounds, each mimicking the properties and effects of its colour.

We have all been trained, sonically and visually, in the relationships within environmental and organic data, having formed hundreds of multisensory associations over the course of our lives. By taking advantage of these inherent associations, the system aims to produce an experience that is inclusive of all ages, backgrounds and musical abilities, whilst showing the links we use every day in a tangible, relatable and emotive way.

Not only can it help the everyday person understand and explore the way we perceive colour and sound, it can also offer a brand-new sensory experience for people who are colour blind, helping them understand the effect colour has on our brain, body and life. With further development, the aim is to produce a model suitable for people with learning disabilities, harnessing the power that interactive sensory experiences can have for those with ASD and other learning disabilities, especially experiences that combine our two most powerful senses, vision and sound.

Light Blue – The Colour to Audio Transposition

The specific semantic relationship between each hue and its audio equivalent was derived from key research in colour psychology, on colour’s physiological effect on the body, and on the Western world’s physical and symbolic associations with colour. The most common associations from each discipline were translated into appropriate acoustic parameters, themes and ideas, which formed the inspiration and foundation for each sound’s production.

Take Light Blue as an example: physiologically, blue has a profound effect on the body and mind. When processed by the eye, it is refracted and pushed back in our perception (Morton, 2015), and when it reaches the blue-sensitive cones, their slow response time makes it the wavelength noticed last in daytime conditions (Van Scoy, 2002). This slow, relaxing and distant quality is further corroborated by external organic factors such as Rayleigh scattering, a type of light scattering that acts far more strongly on short wavelengths such as blue (Hyperphysics.phy-astr.gsu.edu, 2015). It produces the appearance of the blue sky and generates the sense of infinite space and freedom we associate with light blue.
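
To put a number on how strongly Rayleigh scattering favours short wavelengths: the scattered intensity is proportional to 1/λ⁴. The short Python sketch below compares two illustrative wavelengths, 450 nm for blue and 700 nm for red; these values and the function name are chosen for the example and are not part of the project.

```python
def rayleigh_ratio(short_nm: float, long_nm: float) -> float:
    """How many times more strongly light at short_nm is scattered than light at long_nm.

    Rayleigh-scattered intensity is proportional to 1 / wavelength**4.
    """
    return (long_nm / short_nm) ** 4


# Illustrative wavelengths: 450 nm (blue) versus 700 nm (red).
print(round(rayleigh_ratio(450, 700), 2))  # 5.86
```

So blue light at 450 nm is scattered nearly six times as strongly as red light at 700 nm, which is why scattered skylight looks blue.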

Despite its common use to promote calmness and relaxation, blue light is a powerful awakening tool used by our circadian system, something which dates back to our aquatic evolution.

As light enters the eye, it acts on two different pathways: the optic tract, and the retinohypothalamic tract, which begins with melanopsin-containing cells in the retina (Holzman, 2010). From these cells, 60% of the light energy is taken to our endocrine (hormone) and limbic (fear) systems and to the cerebral cortex, which between them control everything from body temperature and heart rate to cognitive functions such as memory and analytic thought (Holzman, 2010).

The other 40% is processed by our circadian system, which controls our body clock. Because melanopsin cells have a peak sensitivity of 459–485 nm, the circadian system is highly responsive to blue light; this sensitivity in turn suppresses the secretion of melatonin, a crucial sleep-aiding hormone, and so stops us from sleeping easily (Van Scoy, 2002).

When our brain functions in different states of consciousness, its electrical activity oscillates at characteristic frequencies, known as brainwaves. These frequencies can be influenced by binaural beats, a method commonly used in meditation: each ear hears a slightly different tone, and the brain perceives a beat at the difference between them. To tap into our alpha waves (associated with conscious yet meditative alertness), the sound for Light Blue contains a beat frequency at the top end of the 8–12 Hz alpha range, replicating the complex physiological effect blue light has on our brain (Holzman, 2010).
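
A binaural beat can be sketched in a few lines. The Python below is a hypothetical illustration, not the project's Pure Data patch: the 220 Hz carrier, the 11 Hz beat (picked to sit near the top of the 8–12 Hz alpha range) and the function names are all assumptions. Each channel carries a pure sine tone, and the two tones differ by the beat frequency; the beat is only perceived when the channels reach separate ears, e.g. over headphones.

```python
import math


def binaural_frequencies(carrier_hz: float, beat_hz: float) -> tuple[float, float]:
    """Left/right sine frequencies centred on carrier_hz and beat_hz apart."""
    half = beat_hz / 2.0
    return carrier_hz - half, carrier_hz + half


def render_binaural(carrier_hz: float, beat_hz: float, seconds: float,
                    sample_rate: int = 44100,
                    amplitude: float = 0.3) -> list[tuple[float, float]]:
    """Return stereo (left, right) float samples in the range -amplitude..amplitude."""
    left_hz, right_hz = binaural_frequencies(carrier_hz, beat_hz)
    frames = []
    for i in range(int(seconds * sample_rate)):
        t = i / sample_rate
        frames.append((amplitude * math.sin(2 * math.pi * left_hz * t),
                       amplitude * math.sin(2 * math.pi * right_hz * t)))
    return frames


# Hypothetical settings: 220 Hz carrier with an 11 Hz beat.
left, right = binaural_frequencies(220.0, 11.0)  # 214.5 Hz and 225.5 Hz
samples = render_binaural(220.0, 11.0, seconds=0.5)
```

Splitting the beat symmetrically around the carrier keeps the perceived pitch centred on the carrier; writing the samples to a stereo file or audio device is left out.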

The more organic and cultural associations of blue commonly lie with the sky, the ocean and water, and with feelings of tranquility and calmness, yet often also melancholy and coldness (Eiseman, 2000). Drawing on these key themes, Light Blue was designed with high-pitched melodic elements to represent the natural spatial associations we make between height, lightness of colour and high-pitched sounds (Walker and Ehrenstein, 2000).

As well as the resonant tone, the sporadic repetition, movement and spatial qualities of these sounds evoke water droplets, space and light refracting on the sea. Using a specific musical scale for these sounds produces a more melancholy feel, replicating the sometimes emotionally cold associations of Light Blue. Furthermore, organic sounds that mimic the ebb and flow of ocean waves on a beach instil cognitive and emotive imagery that we all carry in our minds.

How Does It Work?

The system uses parameter-mapping sonification operating on the Hue, Saturation and Lightness (HSL) colour space. Hue determines the timbre (type) of the sound, lightness adjusts the pitch, and saturation alters the spectral spread: a low saturation value filters out the higher frequencies, narrowing the spectral spread and producing a muted, dull quality.
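
The lightness and saturation mappings can be sketched as two small functions. This Python is a hypothetical illustration of parameter mapping, not the project's Pure Data implementation: the one-octave pitch range, the 500 Hz to 20 kHz low-pass cutoff range and the function names are all assumptions, and a low-pass filter is assumed as the way "filtering out the higher frequencies" is realised. Hue, which selects the timbre, is handled by choosing and crossfading the colour sounds themselves.

```python
# Hypothetical ranges: the article does not give the values used in the Pure Data patch.
PITCH_RANGE_SEMITONES = 12.0  # lightness 0 -> one octave down, 100 -> one octave up
MIN_CUTOFF_HZ = 500.0         # saturation 0 -> heavily muted, dull tone
MAX_CUTOFF_HZ = 20000.0       # saturation 100 -> effectively unfiltered


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(value, high))


def lightness_to_playback_rate(lightness: float) -> float:
    """Map HSL lightness (0-100) to a pitch ratio; 50 leaves the pitch unchanged."""
    semitones = (clamp(lightness, 0.0, 100.0) - 50.0) / 50.0 * PITCH_RANGE_SEMITONES
    return 2.0 ** (semitones / 12.0)


def saturation_to_cutoff_hz(saturation: float) -> float:
    """Map HSL saturation (0-100) to a low-pass filter cutoff frequency.

    Interpolates on a logarithmic frequency scale, so equal steps in saturation
    move the cutoff by equal musical intervals.
    """
    t = clamp(saturation, 0.0, 100.0) / 100.0
    return MIN_CUTOFF_HZ * (MAX_CUTOFF_HZ / MIN_CUTOFF_HZ) ** t
```

With these assumed ranges, lightness 50 plays a sound at its original pitch, lightness 100 an octave higher, and saturation 100 leaves the filter wide open at 20 kHz.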

When the hue degree lands on an intermediate colour such as a bluey-green, the two neighbouring colour sounds are crossfaded, with the hue degree value setting their relative volume levels. The same effect occurs when saturation dips below 15: not only are the colour sounds heavily muted, but the sound for Grey also begins to play alongside the hues. Similarly, alongside the rise or fall in pitch, White and Black begin to crossfade into the mix when lightness goes above 95 or below 10, respectively.

For example, an HSL reading of 10, 100, 5 would play Red and Orange at 50% volume each, both at a very low pitch but with no muting. This Red–Orange mix would then be crossfaded with Black, each at 50% volume, to produce the sound of a black red-orange.
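
The crossfade logic can be sketched as gain calculations. In this hypothetical Python sketch, the hue anchor degrees are assumptions (the article does not list them; Red = 0 and Orange = 20 are chosen so that the 10, 100, 5 example splits evenly), and so are the linear ramps between the stated thresholds and the function names. Only the thresholds (saturation 15, lightness 10 and 95) come from the text.

```python
# Hypothetical hue anchors in degrees, assumed for illustration only.
HUE_ANCHORS = [
    ("Red", 0.0), ("Orange", 20.0), ("Yellow", 55.0), ("Green", 120.0),
    ("Light Blue", 195.0), ("Dark Blue", 225.0), ("Indigo", 255.0),
    ("Violet", 285.0), ("Pink", 330.0),
]


def clamp01(x: float) -> float:
    return max(0.0, min(x, 1.0))


def hue_gains(hue: float) -> dict[str, float]:
    """Linear crossfade between the two colour sounds whose anchors bracket `hue`."""
    hue %= 360.0
    for i, (name, start) in enumerate(HUE_ANCHORS):
        next_name, end = HUE_ANCHORS[(i + 1) % len(HUE_ANCHORS)]
        if end <= start:  # Pink wraps back round to Red at 360 degrees
            end += 360.0
        if start <= hue < end:
            t = (hue - start) / (end - start)
            return {name: 1.0 - t, next_name: t}
    raise ValueError(f"hue {hue} not covered by HUE_ANCHORS")


def layer_gains(saturation: float, lightness: float) -> dict[str, float]:
    """Gains for the hue mix versus the Grey, Black and White sounds.

    Grey fades in linearly below saturation 15, Black below lightness 10 and
    White above lightness 95 (thresholds from the text; linear ramps assumed).
    """
    grey = clamp01((15.0 - saturation) / 15.0)
    black = clamp01((10.0 - lightness) / 10.0)
    white = clamp01((lightness - 95.0) / 5.0)
    edge = black + white  # at most one of these can be non-zero
    return {
        "colour": (1.0 - grey) * (1.0 - edge),
        "Grey": grey * (1.0 - edge),
        "Black": black,
        "White": white,
    }
```

Here `hue_gains(10)` gives Red and Orange 0.5 each, and `layer_gains(100, 5)` gives the colour mix and Black 0.5 each, matching the example. A linear (equal-gain) crossfade is assumed because it reproduces the 50/50 figures; an equal-power crossfade (sine/cosine gains) is a common alternative that avoids a perceived dip in loudness mid-fade.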

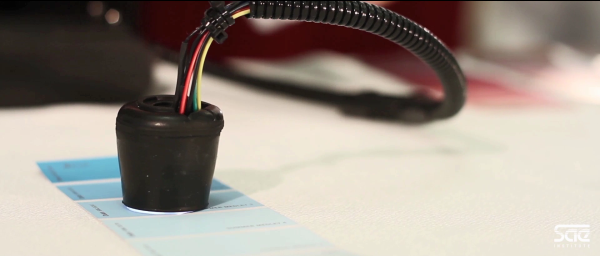

All sounds and effects processing units were designed in the visual programming environment Pure Data; no recordings, samples, MIDI or plug-ins were used. The hardware system is built around Adafruit’s TCS34725 RGB colour sensor, a few additional LEDs, a blackout casing to reduce anomalies caused by ambient lighting, and an Arduino Uno microcontroller. HSL readings are fed into the Hear The Rainbow Mac OS application, which processes the sounds in real time to create the interactive experience.
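
The TCS34725 reports red, green, blue and clear (unfiltered) channel counts, so somewhere in the chain those counts have to become HSL values. The article does not say where or how this happens; the Python below is a hypothetical sketch that normalises each colour channel by the clear channel (an assumption) and then uses the standard-library `colorsys` module. The function name is illustrative.

```python
import colorsys


def sensor_to_hsl(red: int, green: int, blue: int, clear: int) -> tuple[float, float, float]:
    """Convert raw R, G, B and clear counts to (hue in degrees, saturation %, lightness %).

    Assumption: each colour channel is normalised by the clear channel and
    clamped to 0..1; a real build would also need calibration against known swatches.
    """
    if clear <= 0:
        return 0.0, 0.0, 0.0  # no light reached the sensor: treat as black
    r, g, b = (min(channel / clear, 1.0) for channel in (red, green, blue))
    h, l, s = colorsys.rgb_to_hls(r, g, b)  # note: colorsys returns H, L, S order
    return h * 360.0, s * 100.0, l * 100.0
```

Note that `colorsys.rgb_to_hls` returns hue, lightness, saturation in that order, so the values are reordered to match the H, S, L convention used throughout this article.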

Hear The Rainbow’s hardware in use

Additionally, much like our eyes, the system works in two modes: daytime and night-time. Using the computer’s webcam, it analyses the room’s lighting conditions to determine whether the surroundings meet the luminance values for a daytime or night-time condition. The time each sound takes to fade in and reach its target volume is then adjusted according to the human eye’s temporal response to that colour under photopic (daytime) or scotopic (night-time) vision. For example, at night our vision is managed by the rods, which contain rhodopsin and so respond most strongly to short wavelengths, peaking around 500 nm (bluey-green). In daylight, by contrast, our sensitivity shifts towards longer wavelengths such as red and orange (Van Scoy, 2002). To represent this in sound, a blue colour has a longer fade-in time than a red one during the day, and vice versa at night.
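
The day/night logic can be sketched as a luminance threshold followed by a lookup of fade-in times. This Python sketch is hypothetical throughout: the threshold, the fade times and the function names are assumptions, Rec. 601 luma weights are used as one common way to estimate brightness, and capturing the webcam frame itself is left out (`pixels` is assumed to be a list of 8-bit `(r, g, b)` tuples from one frame).

```python
# Hypothetical values: the article gives no threshold or fade times.
DAY_LUMA_THRESHOLD = 0.25  # mean frame luma (0..1) at or above which the room counts as "day"

# Fade-in times in seconds for a few of the nine hues. A longer fade stands in for
# a slower response of the eye to that colour in the given lighting mode.
FADE_IN_SECONDS = {
    "day": {"Red": 0.4, "Orange": 0.5, "Yellow": 0.7, "Green": 1.0, "Light Blue": 1.5},
    "night": {"Red": 1.6, "Orange": 1.3, "Yellow": 1.0, "Green": 0.5, "Light Blue": 0.5},
}


def mean_luma(pixels: list[tuple[int, int, int]]) -> float:
    """Average Rec. 601 luma of 8-bit RGB pixels, scaled to 0..1."""
    if not pixels:
        raise ValueError("need at least one pixel")
    total = sum(0.299 * r + 0.587 * g + 0.114 * b for r, g, b in pixels)
    return total / (255.0 * len(pixels))


def lighting_mode(pixels: list[tuple[int, int, int]]) -> str:
    return "day" if mean_luma(pixels) >= DAY_LUMA_THRESHOLD else "night"


def fade_in_seconds(hue_name: str, pixels: list[tuple[int, int, int]]) -> float:
    return FADE_IN_SECONDS[lighting_mode(pixels)][hue_name]
```

In a bright room this gives Red a shorter fade-in than Light Blue; in a dark room the order reverses, mirroring the photopic/scotopic behaviour described above.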

The system is currently at the prototype stage, with development ongoing!

Watch Hear the Rainbow Work

Video showcasing “Hear the Rainbow”:

This film was produced in conjunction with SAE Institute. Video production: Rodeax LTD. Sound mix: Ludovic Morin.

Video: Listen To The Rainbow at 100% Saturation and 50% Lightness:

All sounds and effects processing were generated in the visual programming environment Pure Data; no samples, recordings, MIDI presets or plug-ins were used in making this project. All sounds are my own. The hue colour samples shown are at 50% lightness and 100% saturation.

Other work and contact details can be found here: http://www.sophiekirkham.com/

Bibliography

Eiseman, L. (2000). Pantone guide to communicating with color. Sarasota, Fla.: Grafix Press.

Empowered By Color, (2015). Cultural Color. [online] Available at: http://www.empower-yourself-with-color-psychology.com/cultural-color.html [Accessed 11 Aug. 2015].

Gage, J. (1999). Color and meaning. Berkeley: University of California Press.

Haverkamp, M. (2009). Look at that sound! Visual aspects of Auditory Perception.

Haverkamp, M. (2012). Visual representations of sound and emotion. In: IV International Conference Synesthesia: Science and Art.

Holzman, D. (2010). What’s in a Color? The Unique Human Health Effects of Blue Light. Environ Health Perspect, 118(1), pp.A22-A27.

Hyperphysics.phy-astr.gsu.edu, (2015). Blue Sky and Rayleigh Scattering. [online] Available at: http://hyperphysics.phy-astr.gsu.edu/hbase/atmos/blusky.html [Accessed 25 Aug. 2015].

Nhs.uk, (2015). Synaesthesia – NHS Choices. [online] Available at: http://www.nhs.uk/Conditions/synaesthesia/Pages/Introduction.aspx [Accessed 12 Aug. 2015].

Nunn, J., Gregory, L., Brammer, M., Williams, S., Parslow, D., Morgan, M., Morris, R., Bullmore, E., Baron-Cohen, S. and Gray, J. (2002). Functional magnetic resonance imaging of synesthesia: activation of V4/V8 by spoken words. Nat. Neurosci., 5(4), pp.371-375.

Van Scoy, F. (2002). The Physics, Physiology, and Psychology of Sight.

Walker, B. and Ehrenstein, A. (2000). Pitch and pitch change interact in auditory displays. Journal of Experimental Psychology: Applied, 6(1), pp.15-30.

Sophie Kirkham is an audio engineer who graduated with First-Class Honours in her BSc at SAE Institute Oxford. After completing her upcoming master’s in computer science, Sophie aims to progress her professional career in audio software development, creating music software, multisensory displays and hardware for people with physical and mental disabilities. Her audio work to date, primarily in post-production, has led her to work for the likes of Legoland, Thorpe Park and the British Arts Council.

Her interest in colour was inspired by early theories of musical harmony and its correlation with the visible light spectrum. Following extensive research into cognitive perception and processing, this affiliation with colour and the visual cortex became the focal point of her recent piece of interactive audio programming. The Hear The Rainbow project combines her passion for audio, colour, sensory perception and cultural studies to produce a synaesthetic sensory experience that turns colour into sound by emulating the way our body and mind process colour.

To view her work and contact Sophie about her Hear The Rainbow Project, visit her website http://www.sophiekirkham.com/ or contact her @SophKirkham on Twitter.
